%matplotlib inline

from preamble import *

Working with Text Data¶

- Types of Data Represented as Strings

- Example Application: Sentiment Analysis of Movie Reviews

- Representing Text Data as a Bag of Words

- Applying Bag-of-Words to a Toy Dataset

- Bag-of-Words for Movie Reviews

- Stopwords

- Rescaling the Data with tf–idf

- Investigating Model Coefficients

- Bag-of-Words with More Than One Word (n-Grams)

- Advanced Tokenization, Stemming, and Lemmatization

- Topic Modeling and Document Clustering

- Latent Dirichlet Allocation

- Summary and Outlook

1. Types of data represented as strings¶

There are four kinds of string data you might see:

Categorical data

- Categorical data comes from a fixed list of values, so the number of unique values is known exactly in advance.

Free strings that can be semantically mapped to categories

- The string is typed freely by a human, but each value can still be mapped to one of a set of defined categories.

- This kind of preprocessing of strings can take a lot of manual effort and is not easily automated. If you are in a position where you can influence data collection, we highly recommend avoiding manually entered values for concepts that are better captured using categorical variables.
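As a rough illustration of the manual effort involved, the sketch below maps free-text answers onto a fixed set of categories with a hand-written lookup table. The table `CANONICAL_COLORS`, the helper `to_category`, and the sample answers are all hypothetical; a real mapping has to be built by inspecting the actual data.

```python
# Hypothetical lookup table from cleaned-up free-text answers to categories.
# Every entry was added by hand, which is exactly the manual effort described above.
CANONICAL_COLORS = {
    "black": "black",
    "blak": "black",          # common misspelling
    "grey": "gray",           # spelling variant
    "gray": "gray",
    "midnight blue": "blue",  # more specific than the category
    "blue": "blue",
}


def to_category(raw_answer, mapping=CANONICAL_COLORS, default="other"):
    """Normalize case and whitespace, then look the answer up in the table."""
    cleaned = " ".join(raw_answer.lower().split())
    return mapping.get(cleaned, default)


answers = ["Black", " blak ", "Grey", "midnight  blue", "rainbow"]
categories = [to_category(answer) for answer in answers]
print(categories)
```

Anything the table does not cover falls into the `"other"` bucket, which is one simple way to handle answers that cannot be mapped.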

Structured string data

- Manually entered values do not correspond to fixed categories, but still have some underlying structure, like addresses, names of places or people, dates, telephone numbers, or other identifiers.

- These kinds of strings are often very hard to parse, and their treatment is highly dependent on context and domain.
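A small sketch of why context matters for structured strings: the same date string parses to two different days depending on which convention is assumed. The sample string is made up; `datetime.strptime` is from the Python standard library.

```python
from datetime import datetime

raw = "01/02/2020"  # made-up example value

# The same characters, read under two different conventions.
day_first = datetime.strptime(raw, "%d/%m/%Y")    # 1 February 2020
month_first = datetime.strptime(raw, "%m/%d/%Y")  # 2 January 2020

print(day_first.date(), month_first.date())
```

Nothing in the string itself says which reading is right; that knowledge has to come from the domain, for example the country the data was collected in.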

Text data

- Free-form text consisting of phrases or sentences.

2. Example application: Sentiment analysis of movie reviews¶

We use a dataset of movie reviews from the IMDb (Internet Movie Database) website. The dataset contains the text of the reviews, together with a label that indicates whether a review is “positive” or “negative.” The IMDb website itself contains ratings from 1 to 10. To simplify the modeling, this annotation is summarized as a two-class classification dataset where reviews with a score of 6 or higher are labeled as positive, and the rest as negative.

!wget -nc http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz -P data

!tar xzf data/aclImdb_v1.tar.gz --skip-old-files -C data

!tree -dL 2 data/aclImdb

!rm -r data/aclImdb/train/unsup

from sklearn.datasets import load_files

reviews_train = load_files("data/aclImdb/train/")

# load_files returns a bunch, containing training texts and training labels

text_train, y_train = reviews_train.data, reviews_train.target

print("type of text_train: {}".format(type(text_train)))

print("length of text_train: {}".format(len(text_train)))

print("text_train[6]:\n{}".format(text_train[6]))

text_train = [doc.replace(b"<br />", b" ") for doc in text_train]

np.unique(y_train)

print("Samples per class (training): {}".format(np.bincount(y_train)))

reviews_test = load_files("data/aclImdb/test/")

text_test, y_test = reviews_test.data, reviews_test.target

print("Number of documents in test data: {}".format(len(text_test)))

print("Samples per class (test): {}".format(np.bincount(y_test)))

text_test = [doc.replace(b"<br />", b" ") for doc in text_test]

The task we want to solve is as follows: given a review, we want to assign the label “positive” or “negative” based on the text content of the review.

However, the text data is not in a format that a machine learning model can handle. We need to convert the string representation of the text into a numeric representation that we can apply our machine learning algorithms to.

3. Representing text data as Bag of Words¶

- A corpus (or text corpus) is a collection of text sources. Each individual text in the corpus is called a document.

The bag-of-words model¶

- A way of representing text data, and of extracting features from it, for use with machine learning algorithms.

A bag-of-words is a representation of text that describes the occurrence of words within a document. It involves two things:

- A vocabulary of known words.

- A measure of the presence of known words.

It is called a “bag” of words, because any information about the order or structure of words in the document is discarded. The model is only concerned with whether known words occur in the document, not where in the document.

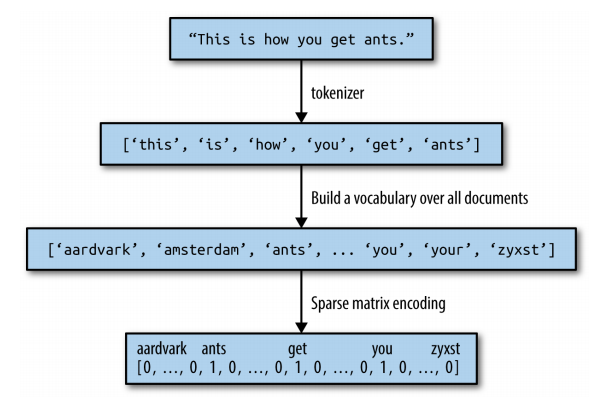

Computing the bag-of-words representation for a corpus of documents consists of the following three steps:

- Tokenization. Split each document into the words that appear in it (called tokens), for example by splitting them on whitespace and punctuation.

- Vocabulary building. Collect a vocabulary of all words that appear in any of the documents, and number them (say, in alphabetical order).

- Encoding. For each document, count how often each of the words in the vocabulary appears in this document.
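The three steps above can be sketched in plain Python. This is a minimal illustration, not how scikit-learn implements it; the helper names `tokenize`, `build_vocabulary`, and `encode` and the two sample documents are assumptions made for the example. The token pattern (two or more word characters, lowercased) mirrors the regular expression that `CountVectorizer` uses by default.

```python
import re
from collections import Counter

# Runs of two or more word characters, as in CountVectorizer's default pattern.
TOKEN_PATTERN = re.compile(r"\b\w\w+\b")


def tokenize(document):
    """Step 1: split a document into lowercase tokens."""
    return TOKEN_PATTERN.findall(document.lower())


def build_vocabulary(documents):
    """Step 2: collect all tokens and number them in alphabetical order."""
    words = sorted({token for doc in documents for token in tokenize(doc)})
    return {word: index for index, word in enumerate(words)}


def encode(document, vocabulary):
    """Step 3: count how often each vocabulary word occurs in the document."""
    counts = Counter(tokenize(document))
    row = [0] * len(vocabulary)
    for word, count in counts.items():
        if word in vocabulary:  # words unseen during vocabulary building are ignored
            row[vocabulary[word]] = count
    return row


docs = ["the cat sat on the mat", "the dog sat"]  # made-up toy corpus
vocabulary = build_vocabulary(docs)
matrix = [encode(doc, vocabulary) for doc in docs]
print(vocabulary)
print(matrix)
```

Each row of `matrix` has one entry per vocabulary word, so most entries are zero for realistic corpora, which is why library implementations store the result as a sparse matrix.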

Applying bag-of-words to a toy dataset¶

bards_words =["The fool doth think he is wise,",

"but the wise man knows himself to be a fool"]

from sklearn.feature_extraction.text import CountVectorizer

vect = CountVectorizer()

vect.fit(bards_words)

print("Vocabulary size: {}".format(len(vect.vocabulary_)))

print("Vocabulary content:\n {}".format(vect.vocabulary_))

bag_of_words = vect.transform(bards_words)

print("bag_of_words: {}".format(repr(bag_of_words)))

print("Dense representation of bag_of_words:\n{}".format(

bag_of_words.toarray()))

Bag-of-words for movie reviews¶

vect = CountVectorizer().fit(text_train)

X_train = vect.transform(text_train)

print("X_train:\n{}".format(repr(X_train)))

# get_feature_names_out() replaces get_feature_names(), which was removed in scikit-learn 1.2
feature_names = vect.get_feature_names_out()

print("Number of features: {}".format(len(feature_names)))

print("First 20 features:\n{}".format(feature_names[:20]))

print("Features 20010 to 20030:\n{}".format(feature_names[20010:20030]))

print("Every 2000th feature:\n{}".format(feature_names[::2000]))

from sklearn.model_selection import cross_val_score

from sklearn.linear_model import LogisticRegression

scores = cross_val_score(LogisticRegression(), X_train, y_train, cv=5)

print("Mean cross-validation accuracy: {:.2f}".format(np.mean(scores)))

from sklearn.model_selection import GridSearchCV

param_grid = {'C': [0.001, 0.01, 0.1, 1, 10]}

grid = GridSearchCV(LogisticRegression(), param_grid, cv=5)

grid.fit(X_train, y_train)

print("Best cross-validation score: {:.2f}".format(grid.best_score_))

print("Best parameters: ", grid.best_params_)

X_test = vect.transform(text_test)

print("Test score: {:.2f}".format(grid.score(X_test, y_test)))

vect = CountVectorizer(min_df=5).fit(text_train)

X_train = vect.transform(text_train)

print("X_train with min_df: {}".format(repr(X_train)))

feature_names = vect.get_feature_names_out()

print("First 50 features:\n{}".format(feature_names[:50]))

print("Features 20010 to 20030:\n{}".format(feature_names[20010:20030]))

print("Every 700th feature:\n{}".format(feature_names[::700]))

grid = GridSearchCV(LogisticRegression(), param_grid, cv=5)

grid.fit(X_train, y_train)

print("Best cross-validation score: {:.2f}".format(grid.best_score_))

4. Stop-words¶

Stop words are the very common words of a language. They carry little information for most NLP problems, such as semantic analysis or classification.

Removing stop words is one common preprocessing step that can give a model cleaner input.

from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS

print("Number of stop words: {}".format(len(ENGLISH_STOP_WORDS)))

print("Every 10th stopword:\n{}".format(list(ENGLISH_STOP_WORDS)[::10]))

# Specifying stop_words="english" uses the built-in list.

# We could also augment it and pass our own.

vect = CountVectorizer(min_df=5, stop_words="english").fit(text_train)

X_train = vect.transform(text_train)

print("X_train with stop words:\n{}".format(repr(X_train)))

grid = GridSearchCV(LogisticRegression(), param_grid, cv=5)

grid.fit(X_train, y_train)

print("Best cross-validation score: {:.2f}".format(grid.best_score_))

Removing the stopwords in the list can decrease the number of features by at most the length of the list, but it might lead to an improvement in performance.
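To make the size argument concrete, the sketch below removes a tiny hand-picked stop list from the vocabulary of the two toy sentences used earlier. The set `STOP_WORDS` here is an assumption for illustration only; the built-in English list in scikit-learn is much longer.

```python
import re

# Tiny hand-picked stop list, for illustration only.
STOP_WORDS = {"the", "is", "a", "to", "be", "he", "but"}


def tokenize(document):
    """Lowercase tokens of two or more word characters."""
    return re.findall(r"\b\w\w+\b", document.lower())


docs = ["The fool doth think he is wise,",
        "but the wise man knows himself to be a fool"]

vocab_all = {token for doc in docs for token in tokenize(doc)}
vocab_kept = vocab_all - STOP_WORDS
removed = len(vocab_all) - len(vocab_kept)

print("Vocabulary size before:", len(vocab_all))
print("Vocabulary size after: ", len(vocab_kept))
print("Features removed:", removed, "of at most", len(STOP_WORDS))
```

The number of removed features can be smaller than the length of the stop list, because a stop word only removes a feature if it was in the vocabulary in the first place.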

5. Rescaling the Data with tf-idf¶

Instead of dropping features that are deemed unimportant, another approach is to rescale features by how informative we expect them to be. One of the most common ways to do this is using the term frequency–inverse document frequency (tf–idf) method.

\begin{equation*} \text{tfidf}(w, d) = \text{tf} \cdot \left(\log\left(\frac{N + 1}{N_w + 1}\right) + 1\right) \end{equation*}

- N is the number of documents in the training set,

- N_w is the number of documents in the training set that the word w appears in,

- tf (the term frequency) is the number of times that the word w appears in the query document d (the document you want to transform or encode).

The intuition of this method is to give high weight to any term that appears often in a particular document, but not in many documents in the corpus. If a word appears often in a particular document, but not in very many documents, it is likely to be very descriptive of the content of that document.
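As a worked example of this intuition, the sketch below reads the weighting as tf × (log((N + 1) / (N_w + 1)) + 1) and assumes the natural logarithm. The function name `tfidf` and all counts are made up for illustration; it compares a word that occurs in every document with one that occurs in only a few.

```python
import math


def tfidf(term_count, n_docs, n_docs_with_term):
    """tf * (log((N + 1) / (N_w + 1)) + 1), with the natural logarithm."""
    idf = math.log((n_docs + 1) / (n_docs_with_term + 1)) + 1
    return term_count * idf


n_docs = 1000  # hypothetical corpus size

# Both words occur 5 times in the document being encoded.
common = tfidf(term_count=5, n_docs=n_docs, n_docs_with_term=1000)  # in every document
rare = tfidf(term_count=5, n_docs=n_docs, n_docs_with_term=9)       # in 9 documents

print("common word: {:.2f}".format(common))
print("rare word:   {:.2f}".format(rare))
```

With equal term frequency, the word that appears in few documents receives the larger weight, while a word that appears in every document keeps exactly its raw count because its logarithm term is zero.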

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.pipeline import make_pipeline

pipe = make_pipeline(TfidfVectorizer(min_df=5, norm=None),

LogisticRegression())

param_grid = {'logisticregression__C': [0.001, 0.01, 0.1, 1, 10]}

grid = GridSearchCV(pipe, param_grid, cv=5)

grid.fit(text_train, y_train)

print("Best cross-validation score: {:.2f}".format(grid.best_score_))

We can also inspect which words tf–idf found most important. Keep in mind that the tf–idf scaling is meant to find words that distinguish documents, but it is a purely unsupervised technique.

vectorizer = grid.best_estimator_.named_steps["tfidfvectorizer"]

# transform the training dataset:

X_train = vectorizer.transform(text_train)

# find maximum value for each of the features over dataset:

max_value = X_train.max(axis=0).toarray().ravel()

sorted_by_tfidf = max_value.argsort()

# get feature names

feature_names = np.array(vectorizer.get_feature_names_out())

print("Features with lowest tfidf:\n{}".format(

feature_names[sorted_by_tfidf[:20]]))

print("Features with highest tfidf: \n{}".format(

feature_names[sorted_by_tfidf[-20:]]))

Features with low tf–idf are those that either are very commonly used across documents or are only used sparingly, and only in very long documents.

Interestingly, many of the high-tf–idf features actually identify certain shows or movies. These terms only appear in reviews for this particular show or franchise, but tend to appear very often in these particular reviews.

sorted_by_idf = np.argsort(vectorizer.idf_)

print("Features with lowest idf:\n{}".format(

feature_names[sorted_by_idf[:100]]))

6. Investigating model coefficients¶

Finally, let’s look in a bit more detail into what our logistic regression model actually learned from the data. Because there are so many features (27,271 after removing the infrequent ones), we clearly cannot look at all of the coefficients at the same time. However, we can look at the largest coefficients, and see which words these correspond to. We will use the last model that we trained, based on the tf–idf features.

mglearn.tools.visualize_coefficients(

grid.best_estimator_.named_steps["logisticregression"].coef_,

feature_names, n_top_features=40)

The figure above shows the largest and smallest coefficients of the logistic regression trained on tf-idf features.
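The selection behind such a plot can be sketched without any plotting: sort the features by coefficient and keep both ends. The coefficient values and the helper `extreme_features` below are hypothetical; a real model has one coefficient per vocabulary entry.

```python
# Hypothetical coefficients for a handful of words.
coefficients = {
    "excellent": 2.1,
    "worst": -3.0,
    "waste": -2.4,
    "great": 1.8,
    "the": 0.01,
    "awful": -2.2,
    "perfect": 1.9,
}


def extreme_features(coefs, n_top):
    """Return the n_top most negative and the n_top most positive features."""
    ranked = sorted(coefs, key=coefs.get)  # ascending by coefficient
    most_negative = ranked[:n_top]
    most_positive = ranked[-n_top:][::-1]  # largest first
    return most_negative, most_positive


most_negative, most_positive = extreme_features(coefficients, n_top=2)
print("Most negative:", most_negative)
print("Most positive:", most_positive)
```

Words with coefficients near zero, such as "the" in this made-up example, contribute little to the decision and never show up at either end.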

7. Bag of words with more than one word (n-grams)¶

- One of the main disadvantages of using a bag-of-words representation is that word order is completely discarded. Therefore, the two strings “it’s bad, not good at all” and “it’s good, not bad at all” have exactly the same representation, even though the meanings are inverted.

- We can change the range of tokens that are considered as features by changing the ngram_range parameter. The ngram_range parameter is a tuple, consisting of the minimum length and the maximum length of the sequences of tokens that are considered.
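The effect can be checked in a few lines of plain Python. This is a sketch with hypothetical helpers `tokenize` and `ngrams`, where `n_min` and `n_max` play the role of the two entries of `ngram_range`; it is not the scikit-learn implementation.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase tokens of two or more word characters."""
    return re.findall(r"\b\w\w+\b", text.lower())


def ngrams(tokens, n_min, n_max):
    """All contiguous token sequences with n_min <= length <= n_max."""
    return [" ".join(tokens[i:i + n])
            for n in range(n_min, n_max + 1)
            for i in range(len(tokens) - n + 1)]


a = tokenize("it's bad, not good at all")
b = tokenize("it's good, not bad at all")

# Unigrams only: both strings produce the same bag of words.
print(Counter(ngrams(a, 1, 1)) == Counter(ngrams(b, 1, 1)))

# Bigrams keep some local word order, so the two strings now differ.
print(ngrams(a, 2, 2))
print(ngrams(b, 2, 2))
```

The bigram "not good" appears only for the first string and "not bad" only for the second, which is the information a unigram model throws away.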

print("bards_words:\n{}".format(bards_words))

cv = CountVectorizer(ngram_range=(1, 1)).fit(bards_words)

print("Vocabulary size: {}".format(len(cv.vocabulary_)))

print("Vocabulary:\n{}".format(cv.get_feature_names_out()))

cv = CountVectorizer(ngram_range=(2, 2)).fit(bards_words)

print("Vocabulary size: {}".format(len(cv.vocabulary_)))

print("Vocabulary:\n{}".format(cv.get_feature_names_out()))

print("Transformed data (dense):\n{}".format(cv.transform(bards_words).toarray()))

For most applications:

- The minimum number of tokens should be one, as single words often capture a lot of meaning.

- Adding bigrams helps in most cases.

- Adding longer sequences—up to 5-grams—might help too, but this will lead to an explosion of the number of features and might lead to overfitting, as there will be many very specific features.

In principle, the number of bigrams could be the number of unigrams squared and the number of trigrams could be the number of unigrams to the power of three, leading to very large feature spaces.

In practice, the number of higher n-grams that actually appear in the data is much smaller, because of the structure of the (English) language, though it is still large.
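A quick count on the two toy sentences used earlier illustrates the gap between the theoretical bound and what actually occurs. The `tokenize` helper is an assumption that mimics the default token pattern; the numbers apply to this toy corpus only.

```python
import re


def tokenize(text):
    """Lowercase tokens of two or more word characters."""
    return re.findall(r"\b\w\w+\b", text.lower())


docs = ["The fool doth think he is wise,",
        "but the wise man knows himself to be a fool"]

unigrams = set()
bigrams = set()
for doc in docs:
    tokens = tokenize(doc)
    unigrams.update(tokens)
    bigrams.update(zip(tokens, tokens[1:]))  # pairs of adjacent tokens

upper_bound = len(unigrams) ** 2  # every ordered pair of unigrams

print("Unigrams:", len(unigrams))
print("Possible bigrams:", upper_bound)
print("Observed bigrams:", len(bigrams))
```

Only a small fraction of the possible pairs ever occurs, since a sentence of k tokens contributes at most k - 1 bigrams.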

cv = CountVectorizer(ngram_range=(1, 3)).fit(bards_words)

print("Vocabulary size: {}".format(len(cv.vocabulary_)))

print("Vocabulary:\n{}".format(cv.get_feature_names_out()))

pipe = make_pipeline(TfidfVectorizer(min_df=5), LogisticRegression())

# running the grid-search takes a long time because of the

# relatively large grid and the inclusion of trigrams

param_grid = {'logisticregression__C': [0.001, 0.01, 0.1, 1, 10, 100],

"tfidfvectorizer__ngram_range": [(1, 1), (1, 2), (1, 3)]}

grid = GridSearchCV(pipe, param_grid, cv=5)

grid.fit(text_train, y_train)

print("Best cross-validation score: {:.2f}".format(grid.best_score_))

print("Best parameters:\n{}".format(grid.best_params_))

# extract scores from grid_search

scores = grid.cv_results_['mean_test_score'].reshape(-1, 3).T

# visualize heat map

heatmap = mglearn.tools.heatmap(

scores, xlabel="C", ylabel="ngram_range", cmap="viridis", fmt="%.3f",

xticklabels=param_grid['logisticregression__C'],

yticklabels=param_grid['tfidfvectorizer__ngram_range'])

plt.colorbar(heatmap)

From the heat map we can see that using bigrams increases performance quite a bit, while adding trigrams only provides a very small benefit in terms of accuracy.

# extract feature names and coefficients

vect = grid.best_estimator_.named_steps['tfidfvectorizer']

feature_names = np.array(vect.get_feature_names_out())

coef = grid.best_estimator_.named_steps['logisticregression'].coef_

mglearn.tools.visualize_coefficients(coef, feature_names, n_top_features=40)

plt.ylim(-22, 22)

# find 3-gram features

mask = np.array([len(feature.split(" ")) for feature in feature_names]) == 3

# visualize only 3-gram features

mglearn.tools.visualize_coefficients(coef.ravel()[mask],

feature_names[mask], n_top_features=40)

plt.ylim(-22, 22)

8. Advanced tokenization, stemming and lemmatization¶

- Tokenization: This step defines what constitutes a word for the purpose of feature extraction, that is, how sentences are split into the tokens that become features. It is often improved in more sophisticated text-processing applications.

- Stemming: Represents each word by its word stem, usually found with rule-based heuristics such as dropping common suffixes. Forms like "replace", "replaced", "replacement", "replaces", and "replacing" are thereby reduced to a common stem, which shrinks the vocabulary and can improve generalization.

- Lemmatization: Uses a dictionary of known word forms (an explicit and human-verified system) and takes the role of the word in the sentence into account to map each word to its normalized form, the lemma.
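The contrast between the two normalization styles can be sketched with toy stand-ins. The suffix list in `crude_stem` is a deliberately crude heuristic and not the Porter algorithm, and `LEMMA_TABLE` is a hypothetical three-entry dictionary; real lemmatizers use large curated resources and the word's part of speech.

```python
# Suffixes are tried in this order; this is NOT the Porter stemmer.
SUFFIXES = ("ement", "ing", "ed", "es", "e", "s")


def crude_stem(word, min_stem_length=3):
    """Rule-based: strip the first matching suffix if enough of the word remains."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_length:
            return word[:-len(suffix)]
    return word


# Hypothetical dictionary of known word forms.
LEMMA_TABLE = {"was": "be", "worse": "bad", "replaced": "replace"}


def lookup_lemma(word):
    """Dictionary-based: return the known base form, or the word itself."""
    return LEMMA_TABLE.get(word, word)


forms = ["replace", "replaced", "replacement", "replaces", "replacing"]
stems = {crude_stem(word) for word in forms}
print(stems)

print(crude_stem("worse"), lookup_lemma("worse"))
```

The stem need not be a real word, whereas the dictionary lookup can return a base form that shares no suffix pattern with the input.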

import spacy

import nltk

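# load spacy's English-language models
# ('en_core_web_sm' is the package name of the small English model;
# the shortcut 'en' is no longer accepted by spaCy 3)
en_nlp = spacy.load('en_core_web_sm')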

# instantiate nltk's Porter stemmer

stemmer = nltk.stem.PorterStemmer()

# define function to compare lemmatization in spacy with stemming in nltk

def compare_normalization(doc):
    # tokenize document in spacy
    doc_spacy = en_nlp(doc)
    # print lemmas found by spacy
    print("Lemmatization:")
    print([token.lemma_ for token in doc_spacy])
    # print tokens found by Porter stemmer
    print("Stemming:")
    print([stemmer.stem(token.norm_.lower()) for token in doc_spacy])

compare_normalization(u"Our meeting today was worse than yesterday, "

"I'm scared of meeting the clients tomorrow.")

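Stemming is always restricted to trimming the word to a stem, so "was" becomes "wa", while lemmatization can retrieve the correct base verb form, "be". Similarly, lemmatization can normalize "worse" to "bad", while stemming produces "wors". Another major difference is that stemming reduces both occurrences of "meeting" to "meet". Lemmatization, by contrast, recognizes the first "meeting" as a noun and leaves it unchanged, while the second occurrence is recognized as a verb and reduced to "meet".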

# Technicality: we want to use the regexp based tokenizer

# that is used by CountVectorizer and only use the lemmatization

# from SpaCy. To this end, we replace en_nlp.tokenizer (the SpaCy tokenizer)

# with the regexp based tokenization

import re

# regexp used in CountVectorizer:

regexp = re.compile('(?u)\\b\\w\\w+\\b')

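# load spacy language model
# ('en_core_web_sm' is the package name of the small English model;
# the shortcut 'en' is no longer accepted by spaCy 3)
en_nlp = spacy.load('en_core_web_sm', disable=['parser', 'ner'])

# replace the tokenizer with the preceding regexp
# (building a Doc from a word list replaces tokens_from_list,
# which newer spaCy releases no longer provide)
from spacy.tokens import Doc

en_nlp.tokenizer = lambda string: Doc(
    en_nlp.vocab, words=regexp.findall(string))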

# create a custom tokenizer using the SpaCy document processing pipeline

# (now using our own tokenizer)

def custom_tokenizer(document):

doc_spacy = en_nlp(document)

return [token.lemma_ for token in doc_spacy]

# define a count vectorizer with the custom tokenizer

lemma_vect = CountVectorizer(tokenizer=custom_tokenizer, min_df=5)

# transform text_train using CountVectorizer with lemmatization

X_train_lemma = lemma_vect.fit_transform(text_train)

print("X_train_lemma.shape: {}".format(X_train_lemma.shape))

# standard CountVectorizer for reference

vect = CountVectorizer(min_df=5).fit(text_train)

X_train = vect.transform(text_train)

print("X_train.shape: {}".format(X_train.shape))

# build a grid-search using only 1% of the data as training set:

from sklearn.model_selection import StratifiedShuffleSplit

param_grid = {'C': [0.001, 0.01, 0.1, 1, 10]}

cv = StratifiedShuffleSplit(n_splits=5, test_size=0.99,

train_size=0.01, random_state=0)

grid = GridSearchCV(LogisticRegression(), param_grid, cv=cv)

# perform grid search with standard CountVectorizer

grid.fit(X_train, y_train)

print("Best cross-validation score "

"(standard CountVectorizer): {:.3f}".format(grid.best_score_))

# perform grid search with Lemmatization

grid.fit(X_train_lemma, y_train)

print("Best cross-validation score "

"(lemmatization): {:.3f}".format(grid.best_score_))

9. Topic Modeling and Document Clustering¶

One particular technique that is often applied to text data is topic modeling, which is an umbrella term describing the task of assigning each document to one or multiple topics, usually without supervision.

- A good example for this is news data, which might be categorized into topics like “politics,” “sports,” “finance,” and so on.

- If each document is assigned a single topic, this is the task of clustering the documents. If each document can have more than one topic, the task relates to decomposition methods: each of the components we learn then corresponds to one topic, and the coefficients of the components in the representation of a document tell us how strongly related that document is to a particular topic.

Often, when people talk about topic modeling, they refer to one particular decomposition method called Latent Dirichlet Allocation (LDA).

Latent Dirichlet Allocation - LDA¶

- The LDA model tries to find groups of words (the topics) that appear together frequently. LDA also requires that each document can be understood as a “mixture” of a subset of the topics.
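The "mixture" idea is easiest to see from the generative side: pick a topic for each word position according to the document's topic weights, then pick a word from that topic. The sketch below only illustrates this generative view with made-up topics and probabilities; it is not the inference procedure that `LatentDirichletAllocation` runs, which works in the opposite direction and estimates topics from observed word counts.

```python
import random

# Hypothetical topics: each maps words to probabilities that sum to 1.
topics = {
    "horror": {"zombie": 0.5, "blood": 0.3, "scary": 0.2},
    "music": {"song": 0.6, "band": 0.3, "concert": 0.1},
}

# Hypothetical document that is 70% about horror and 30% about music.
doc_mixture = {"horror": 0.7, "music": 0.3}


def generate_document(mixture, topics, n_words, rng):
    """Draw a topic for every word position, then a word from that topic."""
    words = []
    for _ in range(n_words):
        topic = rng.choices(list(mixture), weights=list(mixture.values()))[0]
        word_probs = topics[topic]
        word = rng.choices(list(word_probs), weights=list(word_probs.values()))[0]
        words.append(word)
    return words


rng = random.Random(0)  # fixed seed so the sketch is repeatable
document = generate_document(doc_mixture, topics, n_words=20, rng=rng)
print(" ".join(document))
```

Because word order plays no role in this process, a bag-of-words count matrix carries everything the model needs as input.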

vect = CountVectorizer(max_features=10000, max_df=.15)

X = vect.fit_transform(text_train)

from sklearn.decomposition import LatentDirichletAllocation

# n_components is the current name of the parameter formerly called n_topics
lda = LatentDirichletAllocation(n_components=10, learning_method="batch",
                                max_iter=25, random_state=0)

# We build the model and transform the data in one step

# Computing transform takes some time,

# and we can save time by doing both at once

document_topics = lda.fit_transform(X)

print("lda.components_.shape: {}".format(lda.components_.shape))

# for each topic (a row in the components_), sort the features (ascending).

# Invert rows with [:, ::-1] to make sorting descending

sorting = np.argsort(lda.components_, axis=1)[:, ::-1]

# get the feature names from the vectorizer:

feature_names = np.array(vect.get_feature_names_out())

# Print out the 10 topics:

mglearn.tools.print_topics(topics=range(10), feature_names=feature_names,

sorting=sorting, topics_per_chunk=5, n_words=10)

lda100 = LatentDirichletAllocation(n_components=100, learning_method="batch",
                                   max_iter=25, random_state=0)

document_topics100 = lda100.fit_transform(X)

topics = np.array([7, 16, 24, 25, 28, 36, 37, 41, 45, 51, 53, 54, 63, 89, 97])

sorting = np.argsort(lda100.components_, axis=1)[:, ::-1]

feature_names = np.array(vect.get_feature_names_out())

mglearn.tools.print_topics(topics=topics, feature_names=feature_names,

sorting=sorting, topics_per_chunk=5, n_words=20)

# sort by weight of "music" topic 45

music = np.argsort(document_topics100[:, 45])[::-1]

# print the ten documents where the topic is most important
for i in music[:10]:
    # show first two sentences
    print(b".".join(text_train[i].split(b".")[:2]) + b".\n")

fig, ax = plt.subplots(1, 2, figsize=(10, 10))

topic_names = ["{:>2} ".format(i) + " ".join(words)
               for i, words in enumerate(feature_names[sorting[:, :2]])]

# two column bar chart:
for col in [0, 1]:
    start = col * 50
    end = (col + 1) * 50
    ax[col].barh(np.arange(50), np.sum(document_topics100, axis=0)[start:end])
    ax[col].set_yticks(np.arange(50))
    ax[col].set_yticklabels(topic_names[start:end], ha="left", va="top")
    ax[col].invert_yaxis()
    ax[col].set_xlim(0, 2000)
    yax = ax[col].get_yaxis()
    yax.set_tick_params(pad=130)

plt.tight_layout()

- Topic models like LDA are interesting methods to understand large text corpora in the absence of labels—or, as here, even if labels are available. The LDA algorithm is randomized, though, and changing the random_state parameter can lead to quite different outcomes.

- While identifying topics can be helpful, any conclusions you draw from an unsupervised model should be taken with a grain of salt, and we recommend verifying your intuition by looking at the documents in a specific topic.

- The topics produced by the LDA.transform method can also sometimes be used as a compact representation for supervised learning.

Summary and Outlook¶

In particular for text classification tasks such as spam and fraud detection or sentiment analysis, bag-of-words representations provide a simple and powerful solution.

As is often the case in machine learning, the representation of the data is key in NLP applications, and inspecting the tokens and n-grams that are extracted can give powerful insights into the modeling process.

In text-processing applications, it is often possible to introspect models in a meaningful way, for both supervised and unsupervised tasks.

As we discussed earlier, the classes CountVectorizer and TfidfVectorizer only implement relatively simple text-processing methods.

For more advanced text-processing methods, we recommend the Python packages spacy (a relatively new but very efficient and well-designed package), nltk (a very well-established and complete but somewhat dated library), and gensim (an NLP package with an emphasis on topic modeling).

There have been several exciting new developments in text processing in recent years, which are outside of the scope of this book and relate to neural networks. The first is the use of continuous vector representations, also known as word vectors or distributed word representations, as implemented in the word2vec library.

Another direction in NLP that has picked up momentum in recent years is the use of recurrent neural networks (RNNs) for text processing. RNNs are a particularly powerful type of neural network that can produce output that is again text, in contrast to classification models that can only assign class labels. The ability to produce text as output makes RNNs well suited for automatic translation and summarization.
